Beyond Careful: Using AI in 2026

It's February 2026, and I'm stopping all paid AI subscriptions and removing AI from my local machine. Recent security disclosures have convinced me that the current generation of agentic AI systems, whether cloud-based or local, operates on a fundamentally broken security model. Until the architecture changes, sandboxed cloud solutions are the only remotely reasonable option. Let's delve into the concerns:

At the 39th Chaos Communication Congress (39C3) in Hamburg, Germany, Signal President Meredith Whittaker and VP of Strategy and Global Affairs Udbhav Tiwari gave a presentation titled "AI Agent, AI Spy". In it, they laid out the vulnerabilities they see in how agentic AI is being implemented and the very real threat it poses.

Local Agents = Direct Malware Access to Everything

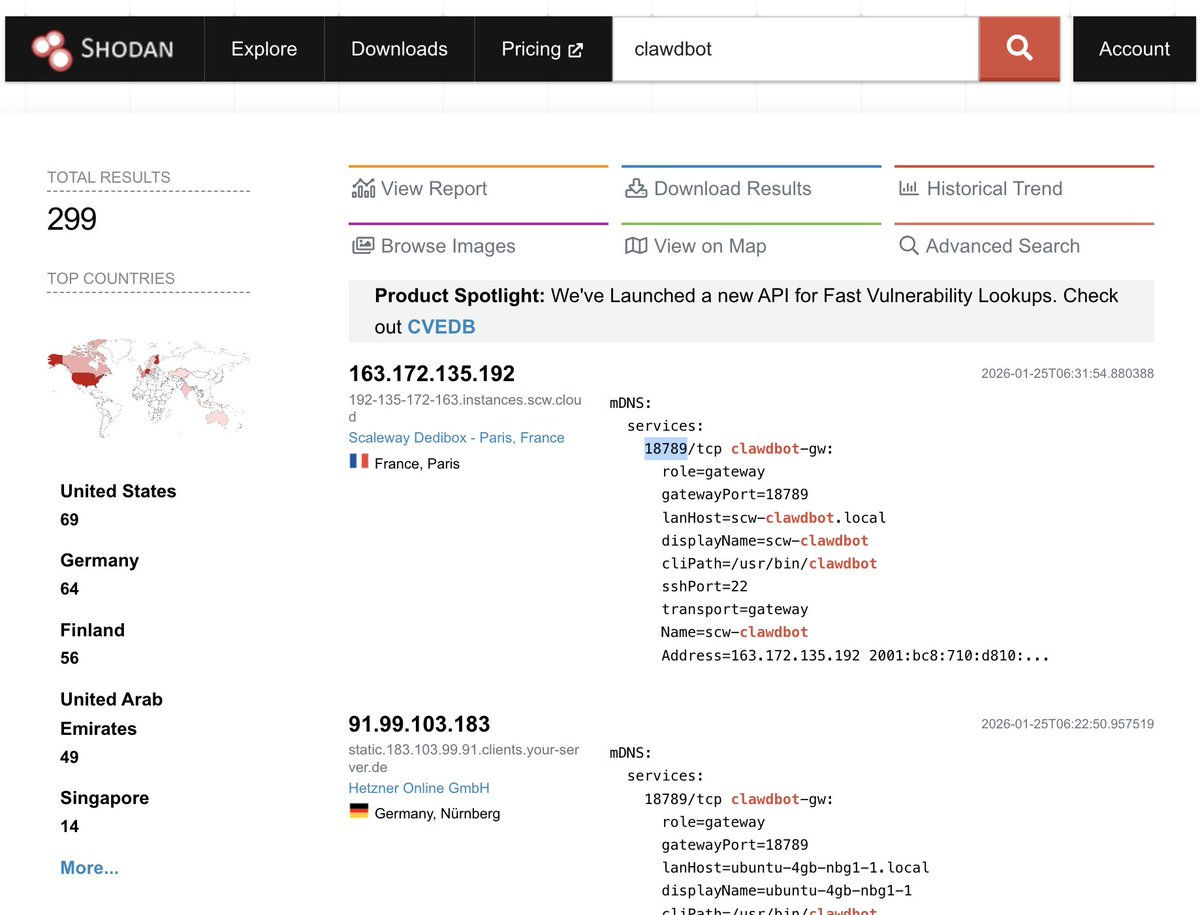

Local LM Studio instances operate with the same architectural flaws as ClawdBot (or whatever its name is now), but with a crucial difference: they run on your actual machine with full access to your filesystem, your networks, and your connected services.

If malware infects your machine, it gains immediate access to whatever your local AI agent can access. There's no network boundary. No cloud provider security team. No architecture designed to contain lateral movement.

Signal's research on Microsoft Recall exposes this perfectly. Recall creates a forensic database on your local machine: screenshots, OCR'd text, semantic analysis, complete timelines of everything you do. It's designed to give AI agents the context they need to act autonomously on your behalf.

But Tiwari pointed out the fatal flaw: malware and hidden prompt injection attacks gain direct access to this database, circumventing all end-to-end encryption entirely.
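To make that concrete, here is a minimal Python sketch of why a local context store offers no boundary against other code running under the same user account. Everything in it is an assumption for illustration: the `captures` table, the `context.db` file name, and the stored text are hypothetical and are not Recall's actual schema or storage format.

```python
import os
import sqlite3
import tempfile


def agent_records_context(db_path: str, text: str) -> None:
    """Stand-in for a local agent saving captured screen text (hypothetical schema)."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS captures (id INTEGER PRIMARY KEY, text TEXT)"
        )
        conn.execute("INSERT INTO captures (text) VALUES (?)", (text,))
        conn.commit()
    finally:
        conn.close()


def other_process_reads_store(db_path: str) -> list:
    """Stand-in for any unrelated program running as the same user.

    It needs no exploit and no key: the file is simply readable.
    """
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute("SELECT text FROM captures ORDER BY id")
        return [row[0] for row in rows]
    finally:
        conn.close()


def demo() -> list:
    # A temporary directory plays the role of the user's profile folder.
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "context.db")
        agent_records_context(db_path, "decrypted message shown on screen")
        return other_process_reads_store(db_path)
```

The message in this sketch may have travelled end-to-end encrypted, but once it has been displayed and captured, the second function reads it back as plain text.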

Signal had to implement a flag preventing its own app from being recorded. But Tiwari emphasized this is a band-aid, not a solution.

Why this matters: Local agents are an unnecessary attack surface. They provide zero advantages over cloud-based systems while introducing direct physical-machine compromise vectors. If your local agent is compromised, everything it can reach on your machine is exposed to whoever compromised it.

The Architecture Problem: Agentic AI Violates Fundamental Security Models

This is the core issue: we've designed autonomous AI systems in ways that contradict decades of security engineering best practices.

Traditional sandboxing assumes isolation. Operating systems enforce privilege boundaries. Applications run with restricted permissions. But agentic AI systems require:

- Continuous operation across multiple domains

- Broad access to diverse credentials and APIs

- Autonomy to initiate actions without explicit prompts

- Real-time awareness of your digital life

This isn't a configuration problem. It's not a bug. It's an architectural collision between what autonomous systems need and what security models can safely provide.
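A rough Python sketch of the model being violated may help. It shows deny-by-default permissions that are narrow (one action on one resource) and short-lived. The `Grant` and `PermissionBroker` names, and the action and resource strings, are hypothetical and do not come from any real agent framework.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """A narrow, expiring permission: one action on one resource."""
    action: str
    resource: str
    expires_at: float


class PermissionBroker:
    """Deny by default; allow only exact, unexpired grants."""

    def __init__(self) -> None:
        self._grants = []

    def grant(self, action, resource, ttl_seconds, now=None):
        # `now` can be passed explicitly to make behaviour reproducible.
        now = time.time() if now is None else now
        self._grants.append(Grant(action, resource, now + ttl_seconds))

    def is_allowed(self, action, resource, now=None):
        now = time.time() if now is None else now
        return any(
            g.action == action and g.resource == resource and now < g.expires_at
            for g in self._grants
        )


broker = PermissionBroker()
broker.grant("read", "calendar", ttl_seconds=60, now=1000.0)
print(broker.is_allowed("read", "calendar", now=1030.0))   # exact grant, still valid
print(broker.is_allowed("read", "email", now=1030.0))      # never granted
print(broker.is_allowed("read", "calendar", now=1061.0))   # grant has expired
```

The four requirements listed above push in the opposite direction: to run continuously across domains, an agent ends up holding grants that cover many resources and effectively never expire, which is the collision described here.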

The ClawdBot research concluded: "Organizations must recognize AI agents as privileged infrastructure comparable to identity providers or secrets management requiring corresponding controls, monitoring, governance."

But that's the problem. We don't have those controls. We don't have auditing frameworks for AI agent behavior. We don't have practical secrets management for systems that need access to dozens of API keys. We don't have reliable detection of when an autonomous system has been compromised.

What This Means For AI in 2026

We need to be honest about where agentic AI currently stands: the architecture is fundamentally insecure and the reliability doesn't justify the risk.

My recommendations:

- Stop reckless deployment of agents until threat models are addressed

- Make opting out the default, with mandatory developer opt-ins

- Provide radical transparency about how systems work at the granular level

Sadly, the financial incentives point toward deployment at scale, so security concerns will only be addressed after a major breach. Citizens will need to apply regulatory pressure, or we'll need a catastrophic public breach to force the issue. I hope I am not caught in that breach, though I may be too late.

In the meantime, I'm voting no on local agents on my personal machine with access to my files, folders, and applications. No autonomous systems that require unfettered access to my digital life (e.g. social media). The technology isn't ready. The security models don't exist yet. The promises of productivity gains aren't worth the certain risks.

Stay Sandboxed

If you use AI systems, use them in ways that:

- Don't require autonomous operation on your personal machines

- Don't grant persistent access to your personal or sensitive infrastructure

- Run in environments the provider controls (cloud, not local)

- Maintain human verification at each critical decision point

- Keep your AI interactions as ephemeral and isolated as possible
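The "human verification" item in the list above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a vetted control: the `CRITICAL_ACTIONS` set, the action names, and the `run_action` helper are all hypothetical.

```python
from typing import Callable

# Hypothetical set of actions that must never run without a human saying yes.
CRITICAL_ACTIONS = {"send_email", "delete_file", "make_payment"}


def run_action(
    action: str,
    execute: Callable[[], str],
    approve: Callable[[str], bool],
) -> str:
    """Run `execute` only if the action is non-critical or a human approves it."""
    if action in CRITICAL_ACTIONS and not approve(action):
        return f"blocked: {action}"
    return execute()


def ask_on_console(action: str) -> bool:
    """Approval callback that asks the person at the keyboard; default is no."""
    answer = input(f"Allow the assistant to {action}? [y/N] ")
    return answer.strip().lower() == "y"
```

In use, something like `run_action("make_payment", pay, ask_on_console)` would stop and wait for a person, while a non-critical action such as summarizing text would run straight through. The gate only helps if the assistant cannot reach the underlying action by another path, which is why it belongs alongside the other items in the list rather than replacing them.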

The age of agentic AI might be coming. But the age of secure agentic AI isn't here yet. Until it is, sandboxed, stateless AI assistance is the only defensible position.

Everything else is gambling with your security.

Sources: "The ClawdBot Vulnerability: How a Hyped AI Agent Became a Security Liability" (HawkEye); "AI Agent, AI Spy", 39C3 presentation (Signal, via Coywolf)